Funded Projects

Active Projects

Hybrid Intelligence for Advanced Collective Awareness and Decision Making in Complex Urban Environments (HIDDEN)

Urban mobility poses various challenges to Cooperative, Connected and Automated Mobility (CCAM) systems. Timely and reliable detection of occluded objects, especially vulnerable road users, is critical. To tackle this challenge, HIDDEN is developing advanced collective awareness and decision-making algorithms, with or without road infrastructure support. HIDDEN deploys Hybrid Intelligence techniques across the whole chain to benefit from the combination of human and machine intelligence. HIDDEN is developing CCAM systems that are not only technologically advanced but also deeply aligned with human driving styles, ethical principles, and regulations, setting a new benchmark for the future of automated vehicles.

Funded by the European Union Horizon Europe program, call HORIZON-CL5-2024-D6-01-04 (CCAM Partnership), 2025-2028.

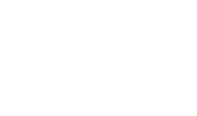

AI-based System Architectures for Autonomous Driving

As the AI revolution continues to be fueled by advances in deep learning, autonomous driving is undergoing a technological paradigm shift from hand-engineered, model-based approaches towards end-to-end trainable, data-driven approaches. In this project, we aim to leverage the full potential of modern AI technology by breaking down the interfaces between the individual components of the autonomous driving stack, thus enabling joint optimization of the overall system. We explore the proposed “AI-first” autonomous driving stack by subdividing it into three branches, namely perception, planning, and architecture design/optimization, each containing several individual projects.

Funded by an industry sponsor, 2023-2026.

CRC 1597 Small Data Project: Essentials for Few-Shot Learning

The Collaborative Research Center (CRC) 'Small Data' integrates contributions from computer science, mathematics, and statistical modeling, with input from biomedicine. In this project, we aim to develop a concise and well-founded framework that generalizes to diverse applications. Specifically, we will analyze the properties of base representations and develop novel core algorithms for few-shot learning, including matching strategies, generative techniques that learn residual features for distinguishing new objects, and techniques for incrementally learning new classes without forgetting previously learned ones.

Funded by the German Research Foundation (DFG), 2023-2027.

Autonomous Robust Outdoor Robots (A-ROR)

A-ROR aims to develop robotic technologies that can perform a wide range of tasks in arable farming. The goal is to enable the economically sustainable use of robots in agriculture and to exploit the advantages of autonomous systems. To achieve this, we are developing improved hardware technologies, sensors, and supporting algorithms for perception and localization. These are particularly necessary under adverse field conditions, such as waterlogged and therefore muddy soil. We are also developing dedicated safety sensor technology together with suitable control strategies that increase maneuverability and prevent unintended system failures, while at the same time improving functional safety.

Funded by the Baden-Württemberg Foundation, call Autonomous Robotics, 2023-2025.

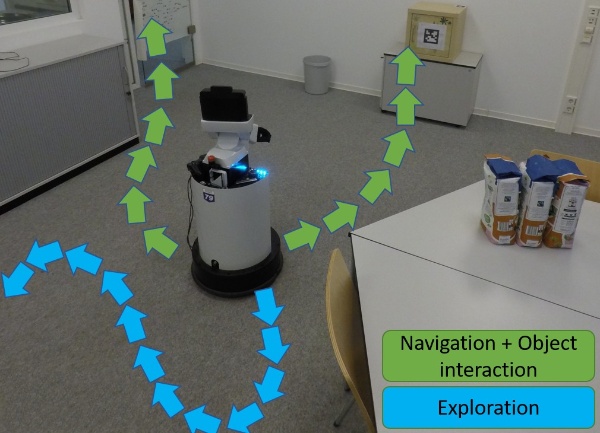

Learning Long Horizon Mobile Manipulation

Mobile manipulation is key both for robotic assistants and for flexible automation processes and warehouse logistics. The goal of this project is to develop novel, reliable decision-making and action-execution algorithms for long-horizon mobile manipulation. In this context, we pursue several interrelated approaches towards scalable learning for autonomous robots in complex environments. On the high level, we aim to develop hierarchical approaches that learn which actions to perform and when to explore. On the low level, we are pursuing complementary work in reinforcement learning and interactive learning with language for mobile manipulation actions.

Funded by an industry sponsor, 2023-2025.

Zuse School ELIZA

The Konrad Zuse School of Excellence in Learning and Intelligent Systems (ELIZA) is a graduate school in the field of artificial intelligence. ELIZA’s research and training activities focus on four main areas: the foundations of machine learning (including ML-driven fields such as computer vision and robot learning), machine learning systems, applications in autonomous systems, and transdisciplinary applications of machine learning in other scientific fields, from the life sciences to physics. The graduate school combines excellent, research-based education at the Master’s and doctoral levels with networking opportunities across different sites.

Funded by the Federal Ministry of Education and Research (BMBF) through the German Academic Exchange Service (DAAD), 2022-2027.

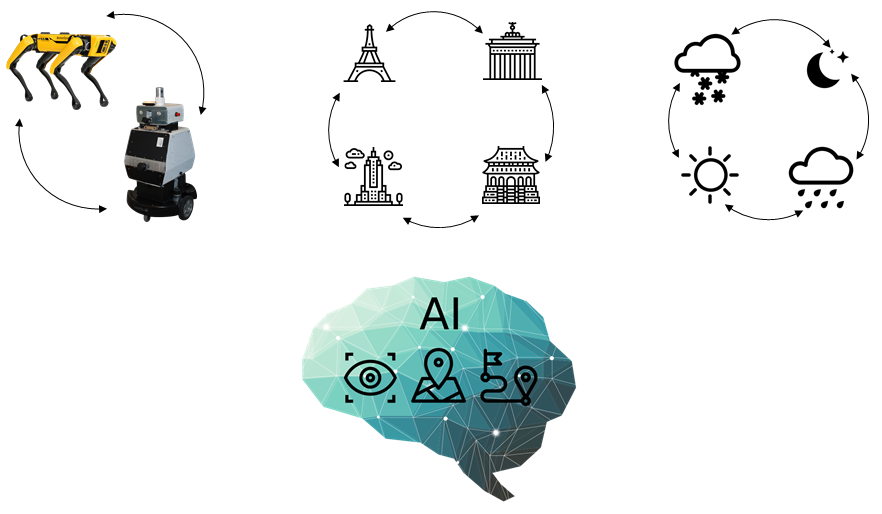

Efficient Learning for Transferable Robot Autonomy in Human-Centered Environments (Autonomy@Scale)

Tremendous progress in the capabilities of autonomous robots over the last decade has enabled impressive results in robot navigation in urban environments. However, most robots today still avoid operating in the vicinity of humans, and existing autonomy methods lack the generalization ability and robustness required for ubiquitous service applications. The goal of this project is to develop data-efficient and transferable learning methods for fundamental autonomy tasks that enable mobile service robots to navigate among humans in previously unseen urban outdoor environments.

Funded by the German Research Foundation (DFG), Emmy Noether Programme AI initiative, 2023-2028.

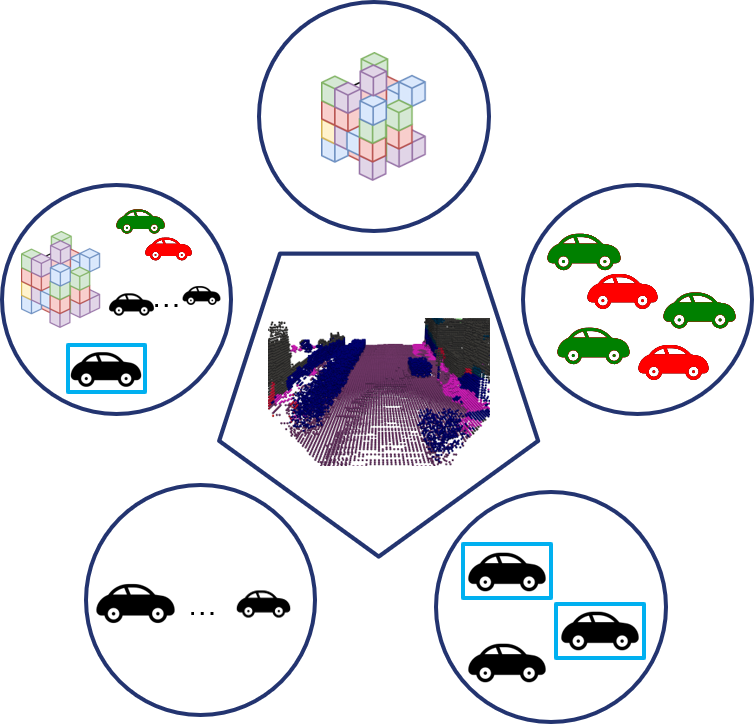

Multimodal Architectures for Joint Perception, Prediction, and Planning

With the rapid expansion of autonomous vehicles to multiple locations around the world, there is an increasing need for scalable and data-efficient solutions for processing onboard sensor data. This project therefore aims to develop a holistic framework that performs multiple 3D perception tasks using data from a variety of sensor modalities. Specifically, this framework leverages self-supervised representation learning to perform the sequential tasks of perception, prediction, and planning in a fully modular and label-efficient manner, enabling quick integration of new solutions and promoting scalability across multiple domains.

Funded by an industry sponsor, 2022-2025.

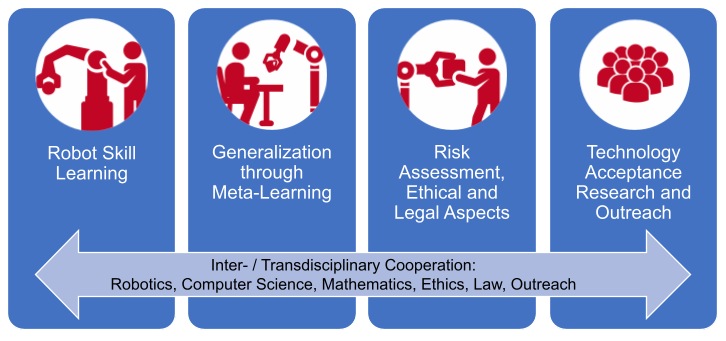

Responsible and Scalable Learning for Robots Assisting Humans (ReScaLe)

AI-based robots are envisioned to support numerous tasks in our society, such as assisting humans in their everyday lives or making production processes more efficient. However, programming such robots is extremely time-consuming and requires engineers with substantial expertise. A promising solution to this problem is for robots to learn tasks from humans by interacting with them. This project aims to enable robots to learn tasks efficiently from human demonstrations using innovative machine learning methods, while at the same time developing ethical and legal models that ensure safe, trustworthy, and responsible human-robot interaction.

Funded by the Carl Zeiss Foundation, call Scientific Breakthroughs in Artificial Intelligence, 2022-2028.

Completed Projects

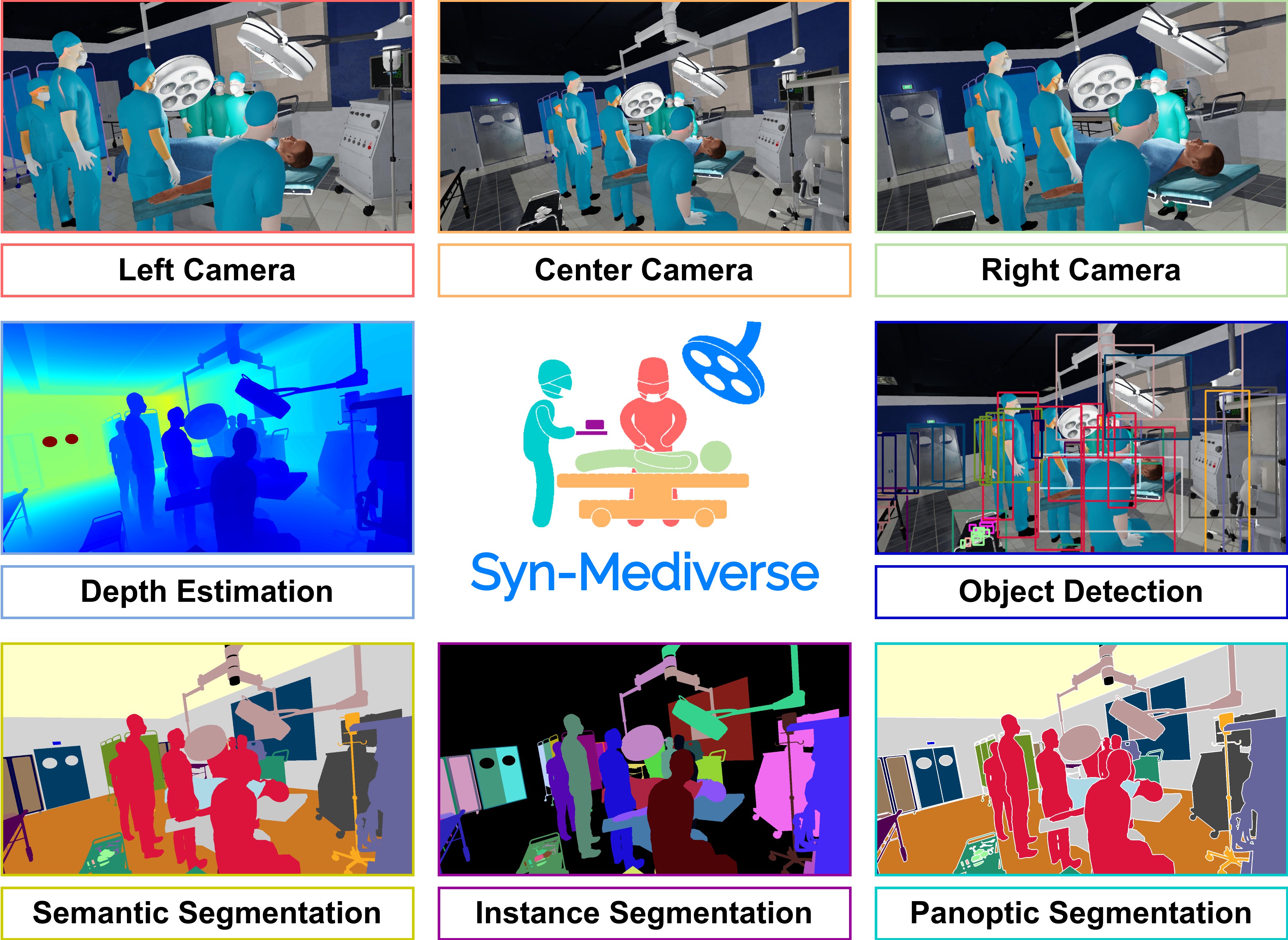

Intelligent Scene Understanding of Healthcare Facilities

Scene understanding of healthcare facilities is an essential capability for fully automated robotic surgery systems. It enables a wide range of functions, such as advanced guidance, automation of surgical tasks, and surgical workflow analysis. In this project, we will develop novel deep learning algorithms for reliable instance segmentation, tracking, and keypoint estimation of the surgical team from operating room video streams. To enable coherent evaluation of such methods, we will develop a novel benchmark for these tasks. We will perform extensive experiments to demonstrate the effectiveness of the proposed system in real-world environments.

Funded by an industry sponsor, 2020-2025.

Robust Visual SLAM for Unstructured Environments

Vision foundation models have gained considerable attention in recent years due to their ability to learn strong representations and generalize well to novel tasks. For this reason, they have been used as feature extractors for a wide range of tasks, such as semantic and panoptic segmentation. More recently, some works have investigated these models for visual place recognition, showing strong generalization across a wide range of environments. However, only a few works have explored leveraging these models for visual feature tracking. In this collaboration, we investigate vision foundation models for feature tracking in unstructured environments, with the aim of using these features for both loop closure detection and visual odometry estimation.

Funded by an industry sponsor, 2024-2025.

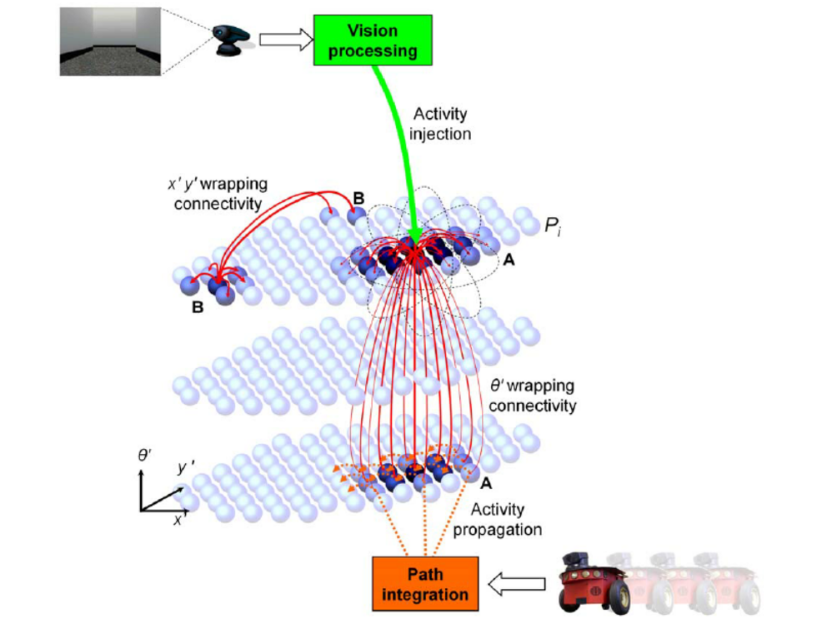

Learning Multisensory Integration for Neural Circuit Modeling (HippoSLAM)

Higher-level cognitive functions such as navigation and decision making rely on neural representations of abstract state spaces that combine sensory, contextual, emotional, and mnemonic information. Existing neural architectures are not well grounded in neural anatomy and physiology, which makes their results difficult to interpret from a neuroscience perspective. Moreover, these models operate on highly processed sensory information, which restricts evaluation to simple toy examples. In this project, we will evaluate hippocampal circuit models on real-world robot navigation, using machine learning methods to learn sensory representations on which the more biologically motivated models can then operate.

Funded by the Federal Ministry of Economics, Science and Arts of Baden-Württemberg through the Cluster of Excellence BrainLinks-BrainTools, 2023-2024.

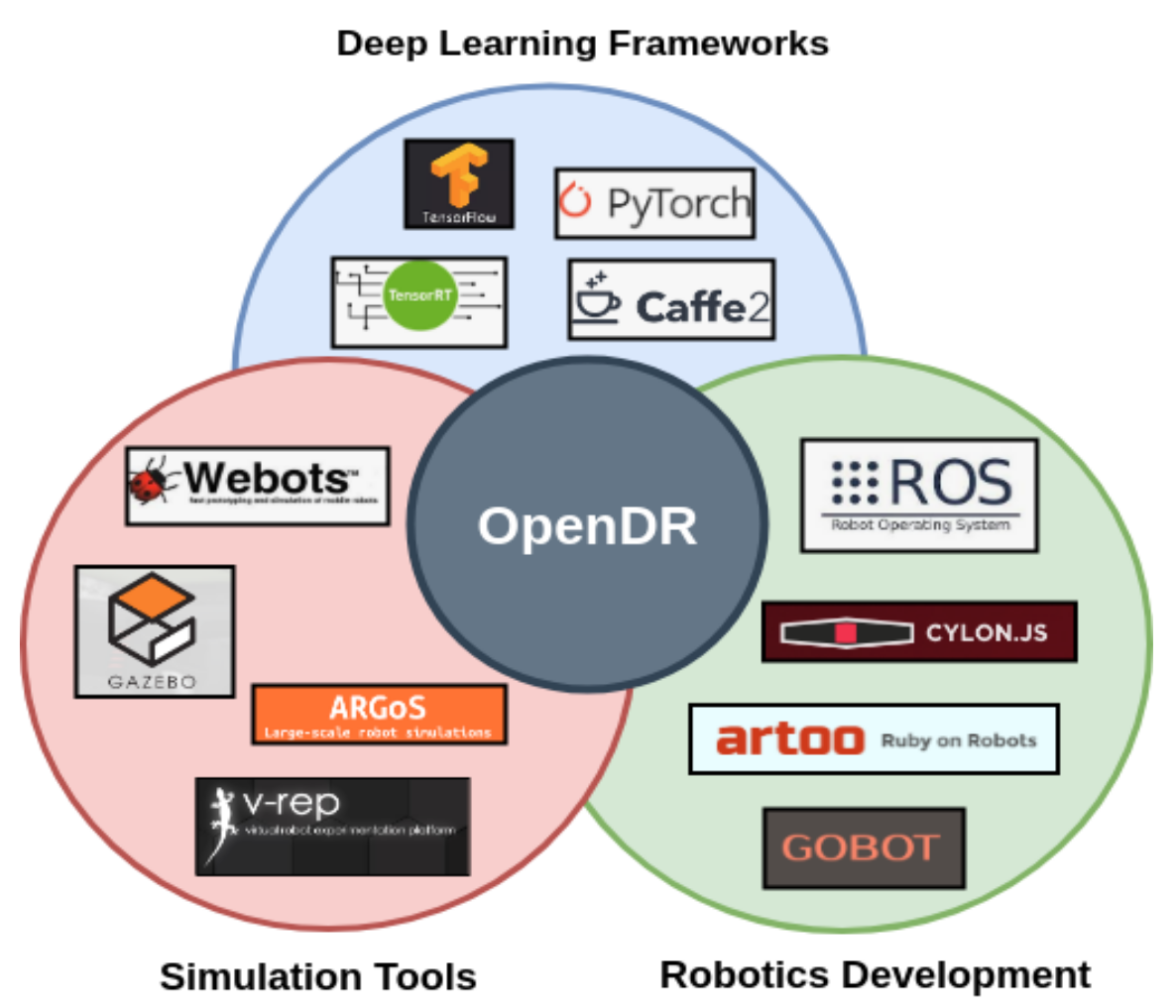

Open Deep Learning Toolkit for Robotics (OpenDR)

The aim of OpenDR is to develop a modular, open, and non-proprietary deep learning toolkit for robotics. We will provide a set of software functions, packages, and utilities to help roboticists develop and test robotic applications that incorporate deep learning. OpenDR will enable linking robotics applications to software libraries such as TensorFlow and to the ROS operating environment. We focus on core AI and cognition technologies in order to give robotic systems the ability to interact with people and environments by means of deep learning methods for active perception, cognition, and decision making. OpenDR will broaden the range of robotics applications that make use of deep learning, which will be demonstrated in the application areas of healthcare, agri-food, and agile production.

Funded by the European Union Horizon 2020 program, call H2020-ICT-2018-2020 (Information and Communication Technologies), 2020-2023.

Brain Controlled Service Robots (ServiceBots)

In this project, we investigate the principles of interaction between the brain and novel autonomous robotic systems. Specifically, we develop robotic systems controlled by brain-machine interfaces that perform service tasks for paralyzed users. In this context, we focus on the following research problems: learning new robotic skills from multimodal brain signal feedback, learning for human-robot interaction, and joint learning of navigation and manipulation tasks. Since learning requires a large amount of interaction data, we use several identical robots that collect and aggregate data in parallel, together with a motion capture system that provides pose information. This will enable the robots to learn skills in everyday environments within a reasonable time.

Funded by the Federal Ministry of Economics, Science and Arts of Baden-Württemberg through the Cluster of Excellence BrainLinks-BrainTools, 2020-2023.

Sensor Systems for Localization of Trapped Victims in Collapsed Infrastructure (SORTIE)

The primary research objective is to improve the detection of trapped victims in collapsed infrastructure in terms of sensitivity, reliability, speed, and the safety of the responders. In this project, we will develop an autonomous unmanned aerial vehicle (UAV) system that augments urban search and rescue operations and helps limit the severe impact of the disaster. This will be enabled by combining sensing principles such as bioradiolocation, cellphone localization, gas detection, and 3D mapping into a single unit. Intelligent UAVs equipped with this multi-sensor system will allow the rescue management team to quickly assess the situation and initiate rescue procedures as early as possible.

Funded by the German Federal Ministry of Education and Research (BMBF), call International Disaster and Risk Management (IKARIM), 2020-2023.

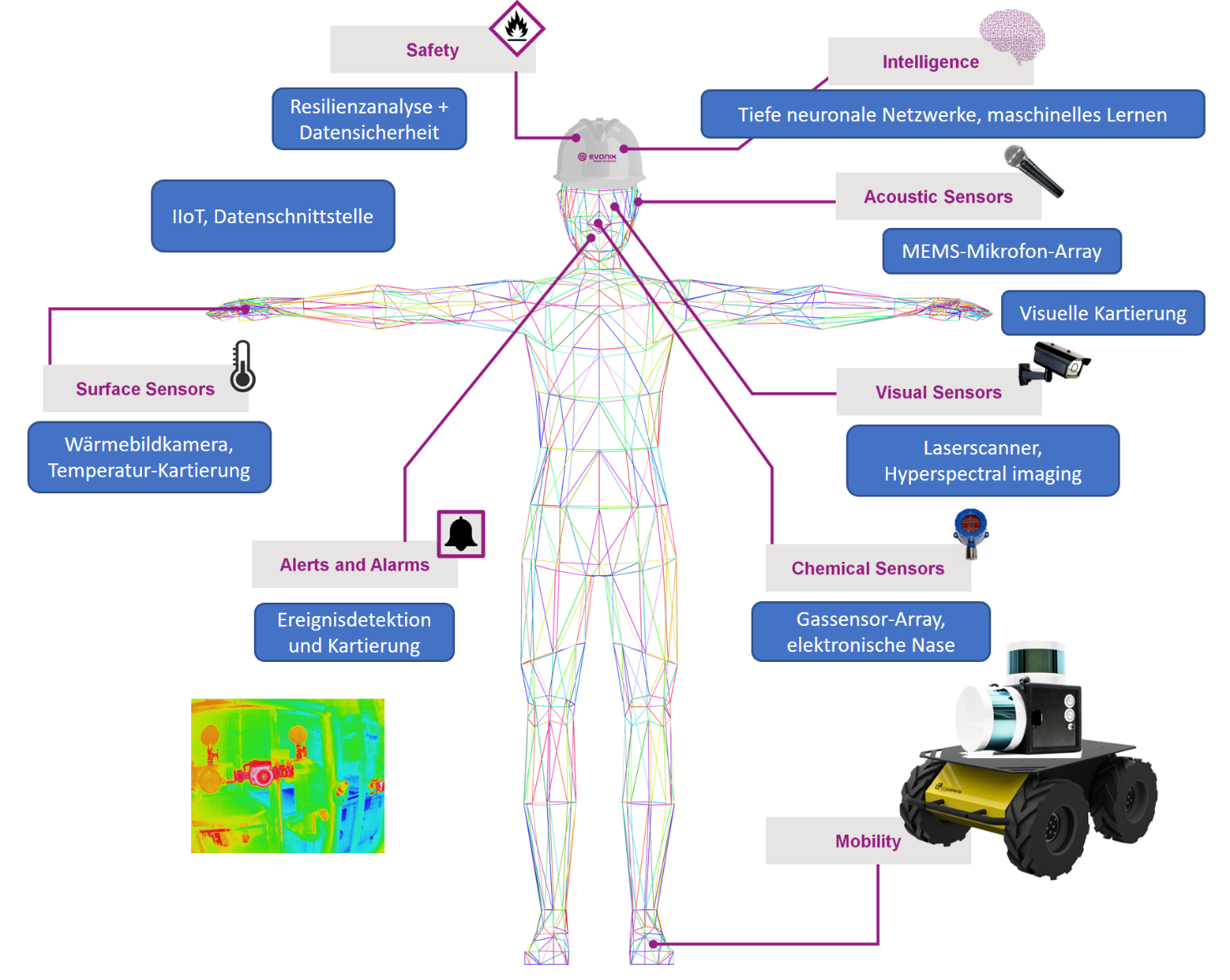

Intelligent Sensor System for Autonomous Monitoring of Production Plants in Industry 4.0 (ISA 4.0)

The ISA 4.0 project aims to develop a new mobile surveillance system for industrial plants. The goal is to support industrial inspectors by taking over inspection tours and automating the inspection process. For this purpose, we are developing a mobile intelligent sensor system that emulates human senses and uses machine learning methods to analyze the information gathered autonomously by the robot. The human senses of hearing, smell, taste, sight, and touch roughly correspond to the sensing capabilities of microphones, gas sensors, chemical sensors, imaging systems, and vibration and temperature sensors, respectively. Equipped with these sensors, the autonomous system is intended to map and carry out inspection tours, thereby enabling a more extensive, improved, and objective system diagnosis.

Funded by the German Federal Ministry of Education and Research (BMBF), call The Federal Government's Framework Program for Research and Innovation, 2020-2023.

From Learning to Relearning Algorithmic Fairness for Deterring Biased Outcomes in Socially-Aware Robot Navigation (Robots4SocialGood)

Humans have the ability to learn and relearn: we adapt to physical changes in the environment, and we also adapt our actions to diminish prejudiced or inequitable conduct. We are investigating the key elements required to bring together the technological and social fields in order to mitigate socially biased outcomes when learning socially aware navigation strategies. From this interdisciplinary perspective, we are exploring the social implications of incorporating fairness measures into learning techniques, considering ethical aspects that pay attention to underrepresented groups of people, in support of initiatives for inclusion and fairness in AI and robotics. This could lead to robots that positively influence society by projecting more equitable social relationships, roles, and dynamics.

Funded by the Federal Ministry of Economics, Science and Arts of Baden-Württemberg through the Cluster of Excellence BrainLinks-BrainTools, 2020-2022.

Embodied Cognitive Robotics (ECBots)

A long-standing vision of robotics has been to create autonomous robots that can learn from the world around them and assist humans in performing a variety of tasks in our homes, in transportation settings, in healthcare, and in dangerous situations. However, most robots today are confined to carefully engineered factory settings or to applications that require only a limited understanding of their surroundings. This project focuses on advancing the state of the art in uncertainty-aware active learning, self-supervised learning, learning from interaction, and navigation. Advancing these techniques is a strong starting point for enabling robots to continuously learn multiple tasks from what they perceive and experience while interacting with the real world.

Funded by the Eva Mayr-Stihl Foundation, 2020-2022.