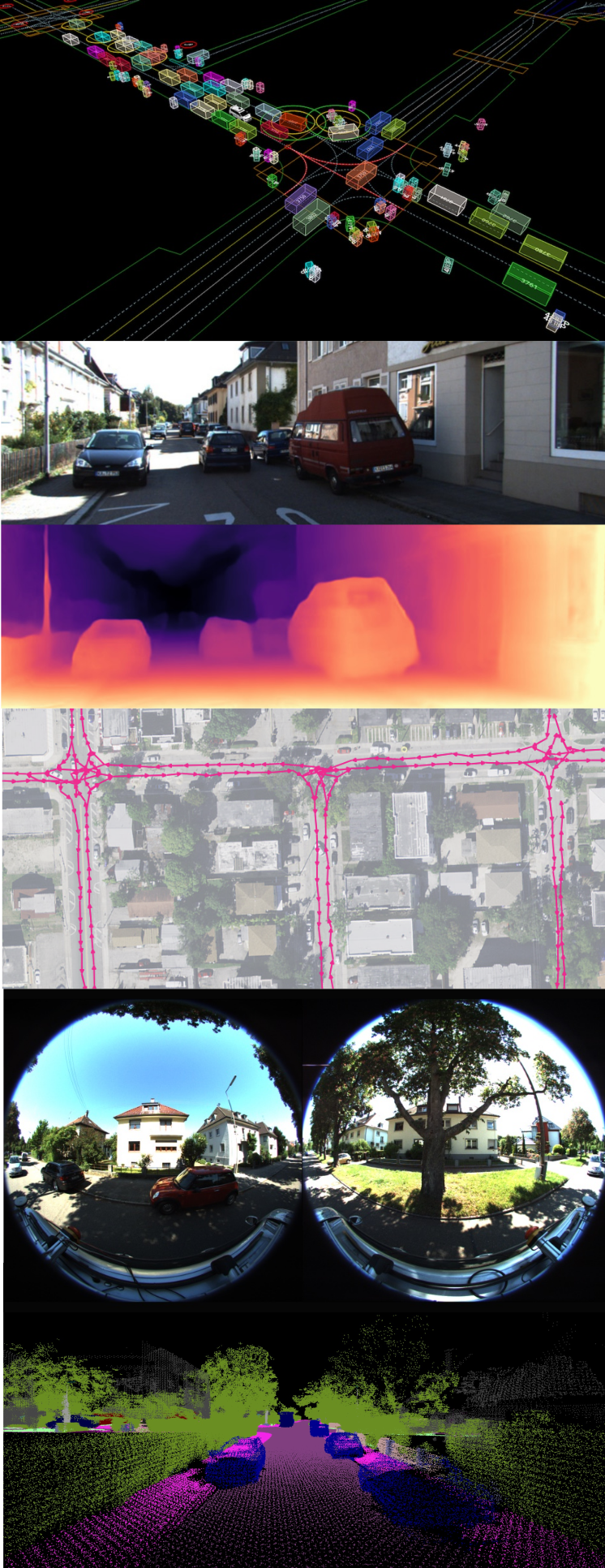

The Robot Learning Lab organizes a bi-weekly reading group (no ECTS) on Autonomy for Mobility in cooperation with the Autonomous Intelligent Systems Lab. In it, we discuss recent publications in the field as well as work in progress in our groups. The reading group focuses mainly on state estimation and perception for autonomous navigation scenarios. The speakers are PhD students of the AIS and RL labs.

The format includes the following modes:

- Paper discussion: Discussion of a recent paper that might interest our groups. You can present the paper PDF with your own annotations to support your thoughts, walk through the paper itself including those annotations, show the paper's videos or data, and use drawings to explain things. No formal presentation is required.

- Work-in-progress updates: You might want to share a rough sketch of what you have been working on over the last few weeks or months. The project does not need to be finished. Tell your colleagues how you are thinking of tackling your specific problem and what the difficulties are.

- General tutorials or overviews: These tutorials can cover topics of general interest to the whole group. This might include general architectures (Transformers/Large Language Models, Diffusion Models, NeRFs, …), typical sub-tasks (Visual Odometry/Servoing, Depth Estimation, Long-Horizon Planning, …), or other concepts relevant to the robotics community (Object-Centric Learning, Active Learning, Scene Graphs, Multi-Modal Learning, …).

Each presenter can choose the format of their talk. Try to create a presentation and discussion that benefits you as much as everyone attending. The target audience consists of professors, postdocs, PhD students, and interested Master's students. If you cannot make it on your scheduled date, please find someone to swap with.

To stay informed about all future sessions, sign up for the mailing list.