Seminar: Robot Learning

Prof. Dr. Abhinav Valada

Co-Organizers:

Eugenio Chisari, Nikhil Gosala, Adrian Röfer, Rohit Mohan, José Arce, Nick Heppert

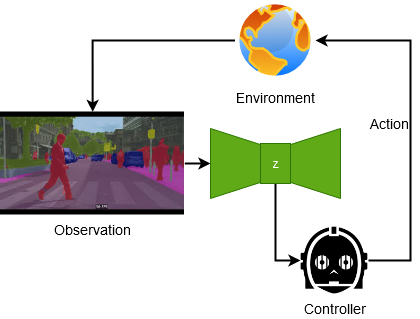

Deep learning has become a key enabler of real-world autonomous systems. Owing to significant advances in deep learning, these systems can learn various tasks end-to-end, including perception, state estimation, and control. This has led to important progress in object manipulation, scene understanding, visual recognition, object tracking, and learning-based control, among other areas. In this seminar, we will study a selection of state-of-the-art works that propose deep learning techniques for tackling various challenges in autonomous systems. In particular, we will analyze contributions to architecture design as well as techniques drawn from computer vision, reinforcement learning, imitation learning, and self-supervised learning.

Course Information

Details:

Course Number: 11LE13S-7317-M

Places: 12

Zoom Session Details:

Passcode: Nx0kS9D76

Course Program:

Introduction: 21/10/2022 @ 14:00

How to make a presentation: 20/01/2023 @ 14:00

Block Seminar: 10/02/2023 @ 09:00

Evaluation Program:

Abstract Due Date: 13/01/2023 @ 23:59

Seminar Presentation: 10/02/2023

Summary Due Date: 24/02/2023 @ 23:59

Requirements:

Basic knowledge of Deep Learning or Reinforcement Learning

Remarks:

Topics for the seminar will be assigned via preference voting. If there are more interested students than places, places will be assigned based on the priority suggestions of the HISinOne system and on motivation (assessed by asking for a short summary of the preferred paper). The date of registration is irrelevant. In particular, we want to avoid students claiming a topic and then leaving the seminar. Please take a brief look at all available papers so that you can make an informed decision before you commit.

Course Material

Recordings:

Lecture 1: Introduction - Valid until: 31/10/2023

Lecture 2: How to Make a Good Presentation - Valid until: 31/10/2023

Slides:

Lecture 1: Introduction

Lecture 2: How to Make a Good Presentation

Templates:

Additional Information

Enrollment Procedure

- Enroll through HISinOne, the course number is 11LE13S-7317-M.

- The registration period for the seminar is from 17/10/2022 to 24/10/2022.

- Attend the introductory session on 21/10/2022 via Zoom (session details are provided above).

- Select three papers from the topic list (see below) and complete this form by 24/10/2022.

- On 27/10/2022, places will be assigned based on the priority suggestions of HISinOne and the student's motivation.

Evaluation Details

- Students are expected to write an abstract, prepare a 20-minute presentation, and write a summary.

- The abstract should not exceed two pages.

- The seminar will be held as a "Blockseminar".

- Discuss the slides of your presentation with your supervisor two weeks before the Blockseminar.

- The summary should not exceed seven pages (excluding bibliography and images). Significantly longer summaries will not be accepted.

- Make sure to cite all work you use, including images and illustrations. Where possible, use your own illustrations.

- The final grade is based on the oral presentation, the written abstract, the summary, and participation in the Blockseminar.

What should the Summary contain?

The summary should address the following questions:

- What is the paper's main contribution and why is it important?

- How does it relate to other techniques in the literature?

- What are the strengths and weaknesses of the paper?

- What would be some interesting follow-up work? Can you suggest any possible improvements in the proposed methods? Are there any further interesting applications that the authors might have overlooked?

Graded Component Submission

- Save your document as a PDF and submit it directly to your topic supervisor via email.

- The filename should be in the format "FirstName_LastName_X.pdf" where X is the evaluation component (Abstract / Summary / Presentation).

Topics

- Temporal Logic Imitation: Learning Plan-Satisficing Motion Policies from Demonstrations

  Supervisor: Adrian Röfer

- Self-Supervised Learning of Scene-Graph Representations for Robotic Sequential Manipulation Planning

  Supervisor: Adrian Röfer

- Where2Act: From Pixels to Actions for Articulated 3D Objects

  Supervisor: Nick Heppert

- Fit2Form: 3D Generative Model for Robot Gripper Form Design

  Supervisor: Nick Heppert

- CenterSnap: Single-Shot Multi-Object 3D Shape Reconstruction and Categorical 6D Pose and Size Estimation

  Supervisor: Eugenio Chisari

- Perceiver-Actor: A Multi-Task Transformer for Robotic Manipulation

  Supervisor: Eugenio Chisari

- Lepard: Learning partial point cloud matching in rigid and deformable scenes

  Supervisor: José Arce

- 4D-StOP: Panoptic Segmentation of 4D LiDAR using Spatio-temporal Object Proposal Generation and Aggregation

  Supervisor: José Arce

- CoBEVT: Cooperative Bird’s Eye View Semantic Segmentation with Sparse Transformers

  Supervisor: Nikhil Gosala

- Learning Interpretable End-to-End Vision-Based Motion Planning for Autonomous Driving with Optical Flow Distillation

  Supervisor: Nikhil Gosala

- Drive&Segment: Unsupervised Semantic Segmentation of Urban Scenes via Cross-modal Distillation

  Supervisor: Rohit Mohan

- ADAPT: Vision-Language Navigation with Modality-Aligned Action Prompts

  Supervisor: Rohit Mohan

Questions?

If you have any questions, please direct them to Eugenio Chisari before the topic allotment, and to your supervisor after you have received your topic.